LieBN on SPD Manifolds: The Additional Details and Experiments That You Don't Want to Miss

28 Feb 2025

We use the official code of SPDNetBN and TSMNet to implement our experiments on the SPDNet and TSMNet backbones.

LieBN as a Natural Generalization of Euclidean BN, And Backpropagation of Matrix Functions

28 Feb 2025

The centering and biasing in Euclidean BN correspond to the group action of ℝ.
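As a rough illustration of that statement, the sketch below writes ordinary batch normalization so that centering and biasing are both calls to one translation helper, i.e. the additive group of real numbers acting on the data by shifting it. The helper names `translate` and `euclidean_bn`, the default `eps`, and the choice of NumPy are assumptions made for this example; they are not taken from the article or its code.

```python
import numpy as np

def translate(x, t):
    """Action of the additive group (R, +): shift every sample by t."""
    return x + t

def euclidean_bn(x, gamma=1.0, beta=0.0, eps=1e-5):
    """Batch normalization over axis 0, written as translate -> scale -> translate."""
    mean = x.mean(axis=0)
    var = x.var(axis=0)
    centered = translate(x, -mean)                   # centering: act by the inverse of the batch mean
    scaled = gamma * centered / np.sqrt(var + eps)   # scaling: control the dispersion
    return translate(scaled, beta)                   # biasing: act by the bias parameter

x = np.array([[1.0, 2.0], [3.0, 6.0]])
y = euclidean_bn(x, gamma=2.0, beta=0.5)
```

After the call, each column of `y` has mean `beta` and standard deviation close to `gamma`, which is the behaviour a Lie group version would reproduce with a different group operation in place of addition.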

Basic Layers in SPDNet, TSMNet, and Statistical Results of Scaling in the LieBN

27 Feb 2025

SPDNet mimics the conventional densely connected feedforward network, consisting of three basic building blocks

Proposing A Novel Framework Called LieBN: A Conclusion

27 Feb 2025

This paper proposes a novel framework called LieBN, enabling batch normalization over Lie groups.

A Lie Group Approach to Riemannian Batch Normalization: Experimental Results

27 Feb 2025

For each family of LieBN or DSMLieBN, we report two representatives: the standard one induced from the standard metric (θ = 1)

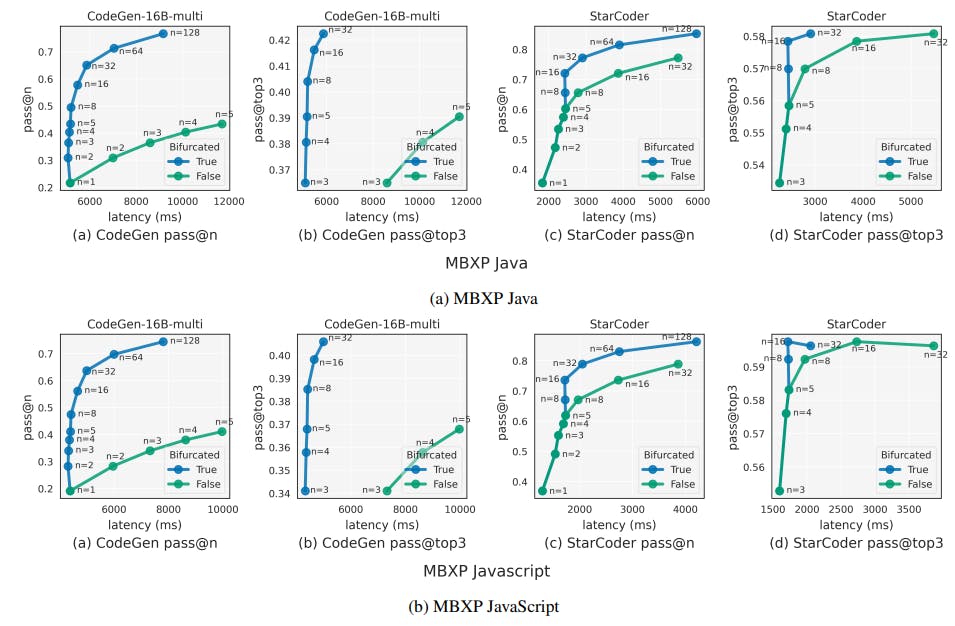

Smarter AI Code Completion with Memory-Efficient Techniques

26 Feb 2025

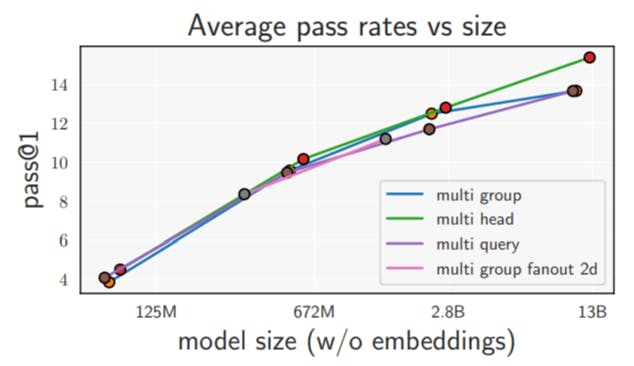

New research shows bifurcated attention improves AI efficiency across Java, JavaScript, and Python, while speculative decoding further reduces memory bottlenecks.

Reducing Memory Overhead in AI Models

26 Feb 2025

Discover how bifurcated attention boosts AI efficiency by reducing memory I/O costs and simplifying implementation with just 20 lines of PyTorch code.
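A minimal sketch of the idea, assuming the usual single-context batch sampling setup: every sample in the batch shares one context, so the context keys and values are stored once without a batch dimension, while the tokens decoded so far are stored per sample. Attention logits are computed separately for the two parts, joined for a single softmax, and the two weighted sums are added. This is written in NumPy rather than PyTorch so it runs without a deep learning framework; the function name, argument names, and tensor layout are assumptions made for this example and are not the article's code.

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)  # subtract the max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def bifurcated_attention(q, k_ctx, v_ctx, k_dec, v_dec):
    """q: (b, h, n, d); k_ctx, v_ctx: (h, m_c, d), shared by the batch;
    k_dec, v_dec: (b, h, m_d, d), one copy per sample."""
    d = q.shape[-1]
    m_c = k_ctx.shape[1]
    # Logits against the shared context: no batch axis on the key tensor.
    logits_ctx = np.einsum("bhnd,hmd->bhnm", q, k_ctx)
    # Logits against the per-sample decoded tokens.
    logits_dec = np.einsum("bhnd,bhmd->bhnm", q, k_dec)
    # One softmax over the joined logits keeps the result identical to full attention.
    w = softmax(np.concatenate([logits_ctx, logits_dec], axis=-1) / np.sqrt(d))
    w_ctx, w_dec = w[..., :m_c], w[..., m_c:]
    out_ctx = np.einsum("bhnm,hmd->bhnd", w_ctx, v_ctx)
    out_dec = np.einsum("bhnm,bhmd->bhnd", w_dec, v_dec)
    return out_ctx + out_dec

rng = np.random.default_rng(0)
b, h, n, d, m_c, m_d = 3, 2, 1, 4, 5, 2
q = rng.normal(size=(b, h, n, d))
k_ctx, v_ctx = rng.normal(size=(h, m_c, d)), rng.normal(size=(h, m_c, d))
k_dec, v_dec = rng.normal(size=(b, h, m_d, d)), rng.normal(size=(b, h, m_d, d))
out = bifurcated_attention(q, k_ctx, v_ctx, k_dec, v_dec)
```

The output matches standard attention over the concatenated keys and values; the difference is only that the shared context tensors are never replicated across the batch.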

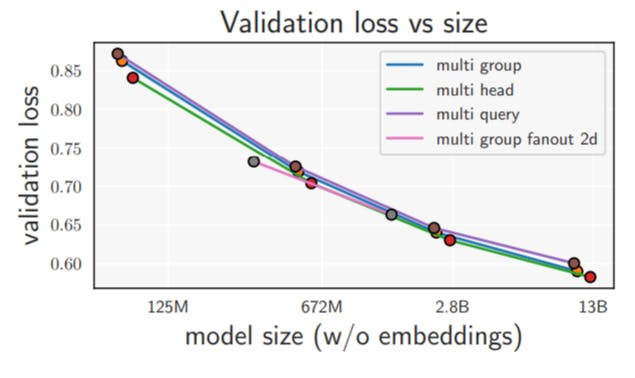

Understanding Multi-Group Attention in AI Models

26 Feb 2025

Explore multi-group attention, memory IO costs, and FLOPs in AI models. Learn how incremental decoding and context encoding impact AI performance.

How We Implemented Our Three Families of LieBN to SPD Neural Networks

26 Feb 2025

In this section, we apply our three families of LieBN to SPD neural networks.